Guilty article in PNAS (2003).

Just a quick pointer to a pair of posts on how sub-optimally designed and analyzed fMRI studies can continue to influence the field. Professor Dorothy Bishop of Oxford University posted a scathing analysis of one such paper, in *Time for neuroimaging (and PNAS) to clean up its act*:

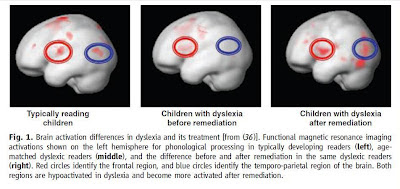

> Temple et al (2003) published an fMRI study of 20 children with dyslexia who were scanned both before and after a computerised intervention (Fast ForWord) designed to improve their language. The article in question was published in the Proceedings of the National Academy of Sciences, and at the time of writing has had 270 citations. I did a spot check of fifty of those citing articles to see if any had noted problems with the paper: only one of them did so.

Bishop noted at least four major problems in the paper that invalidated the conclusions, including:

> - The authors presented uncorrected whole brain activation data. This is not explicitly stated but can be deduced from the z-scores and p-values. Russell Poldrack, who happens to be one of the authors of this paper, has written eloquently on this subject: “…it is critical to employ accurate corrections for multiple tests, since a large number of voxels will generally be significant by chance if uncorrected statistics are used. ... The problem of multiple comparisons is well known but unfortunately many journals still allow publication of results based on uncorrected whole-brain statistics.” Conclusion 2 is based on uncorrected p-values and is not valid.
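
Poldrack's point about voxels reaching significance by chance is easy to make concrete. Here's a quick simulation, not taken from either post, assuming a hypothetical 50,000 independent voxels with no true activation anywhere and an uncorrected threshold of p < .001. About 50 voxels should "activate" by chance alone. Bonferroni is used here only as the simplest familywise correction; real fMRI data are spatially correlated, and analysis pipelines typically use random field theory, cluster-based, or FDR methods instead.

```python
import random

def count_false_positives(n_voxels: int, alpha: float, seed: int = 0) -> dict:
    """Simulate n_voxels null voxels (no true effect anywhere) and count how
    many pass an uncorrected threshold versus a Bonferroni-corrected one.

    Assumes independent voxels, which real fMRI data are not."""
    rng = random.Random(seed)
    # Under the null hypothesis, p-values are uniformly distributed on [0, 1).
    p_values = [rng.random() for _ in range(n_voxels)]
    bonferroni_alpha = alpha / n_voxels
    return {
        "expected_uncorrected": n_voxels * alpha,
        "uncorrected": sum(p < alpha for p in p_values),
        "bonferroni": sum(p < bonferroni_alpha for p in p_values),
    }

# Hypothetical whole-brain analysis: 50,000 voxels at an uncorrected p < .001.
result = count_false_positives(n_voxels=50_000, alpha=0.001)
print(result)
```

Every "significant" voxel in the uncorrected count is a false positive by construction, since the simulation contains no real effects.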

Indeed, Dr. Russ Poldrack of the University of Texas at Austin is a leader in neuroimaging methodology and a vocal critic of shoddy design and overblown interpretations. And yes, he was an author on the 2003 paper in question. Poldrack replied to Bishop in his own blog:

> Skeletons in the closet
>
> As someone who has thrown lots of stones in recent years, it's easy to forget that anyone who publishes enough will end up with some skeletons in their closet. I was reminded of that fact today, when Dorothy Bishop posted a detailed analysis of a paper that was published in 2003 on which I am a coauthor.

I'm not convinced that every prolific scientist has skeletons in his/her closet, but it was nice to see that Poldrack acknowledged this particular bag of bones:

> Dorothy notes four major problems with the study:
>
> 1. There was no dyslexic control group; thus, we don't know whether any improvements over time were specific to the treatment, or would have occurred with a control treatment or even without any treatment.
> 2. The brain imaging data were thresholded using an uncorrected threshold.
> 3. One of the main conclusions (the "normalization" of activation following training) is not supported by the necessary interaction statistic, but rather by a visual comparison of maps.
> 4. The correlation between changes in language scores and activation was reported for only one of the many measures, and it appeared to have been driven by outliers.
>
> Looking back at the paper, I see that Dorothy is absolutely right on each of these points. In defense of my coauthors, I would note that points 2-4 were basically standard practice in fMRI analysis 10 years ago (and still crop up fairly often today). Ironically, I raised two of these issues in my recent paper for the special issue of NeuroImage celebrating the 20th anniversary of fMRI, in talking about the need for increased methodological rigor...
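
The point about "normalization" being read off a visual comparison of maps is the classic case of the difference between "significant" and "not significant" not itself being significant. Here's a minimal sketch with made-up numbers (not from Temple et al.), assuming two independent estimates with normally distributed errors: one effect clears p < .05, the other doesn't, yet the direct test of their difference, the interaction, is nowhere near significant.

```python
import math

def two_sided_p(z: float) -> float:
    """Two-sided p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2))

def compare_effects(est_a: float, se_a: float, est_b: float, se_b: float) -> dict:
    """Test each effect against zero, then test the difference between them
    (the interaction), assuming the two estimates are independent and normal."""
    se_diff = math.sqrt(se_a ** 2 + se_b ** 2)
    return {
        "p_a": two_sided_p(est_a / se_a),
        "p_b": two_sided_p(est_b / se_b),
        "p_diff": two_sided_p((est_a - est_b) / se_diff),
    }

# Hypothetical effects: 0.50 +/- 0.20 in one group, 0.20 +/- 0.20 in the other.
res = compare_effects(0.50, 0.20, 0.20, 0.20)
print({k: round(v, 3) for k, v in res.items()})
# {'p_a': 0.012, 'p_b': 0.317, 'p_diff': 0.289}
```

Looking at two thresholded maps side by side is the imaging equivalent of comparing `p_a` with `p_b`; supporting a claim of "normalization" would call for a direct test of the group × time interaction.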

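But are old-school methods entirely to blame? I don't think so. Some of these errors, like inferring an interaction from a visual comparison of two maps or reporting a correlation that hinges on outliers, look like errors in basic statistics rather than anything specific to fMRI. At any rate, I highly recommend that you read these two posts.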
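
An outlier-driven correlation is just as easy to illustrate. In this sketch with hypothetical data, the first five children show no relationship at all between change in language score and change in activation, yet adding a single extreme child pushes Pearson's r to about 0.91. A leave-one-out check (one simple diagnostic among several; rank-based correlations or robust regression are alternatives) flags that child immediately.

```python
import math

def pearson_r(xs: list, ys: list) -> float:
    """Pearson correlation coefficient for two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def leave_one_out(xs: list, ys: list) -> list:
    """Recompute r with each observation dropped in turn."""
    return [pearson_r(xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:]) for i in range(len(xs))]

# Hypothetical data: the first five children show no relationship between
# change in language score and change in activation; the sixth is extreme.
score_change = [1, 2, 3, 4, 5, 12]
activation_change = [2, 1, 3, 1, 2, 10]

r_all = pearson_r(score_change, activation_change)
loo = leave_one_out(score_change, activation_change)
# The observation whose removal moves r the most is the influential one.
most_influential = max(range(len(loo)), key=lambda i: abs(r_all - loo[i]))

print(round(r_all, 2))     # 0.91
print(most_influential)    # 5 (the extreme child)
```

Without the sixth child, r for the remaining five is essentially zero, so the reported "relationship" rests on one data point.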

Many questions remain. How self-correcting is the field? What should we do with old (and not-so-old) articles that are fatally flawed? How many of these results have replicated, or failed to replicate? Should we put warning labels on the failures?

Professor Bishop also noted specific problems at PNAS, like the "contributed by" track allowing academy members to publish with little or no peer review. The "pre-arranged editor" track is another potential issue. I suggest a series of warning labels for such articles, such as the one shown below.
